This project has two parts. The first part experiments with two filtering approaches: the finite difference operator and the derivative of Gaussian (DoG) filter. The second part applies a series of image transformation techniques that manipulate the frequency content of different images.

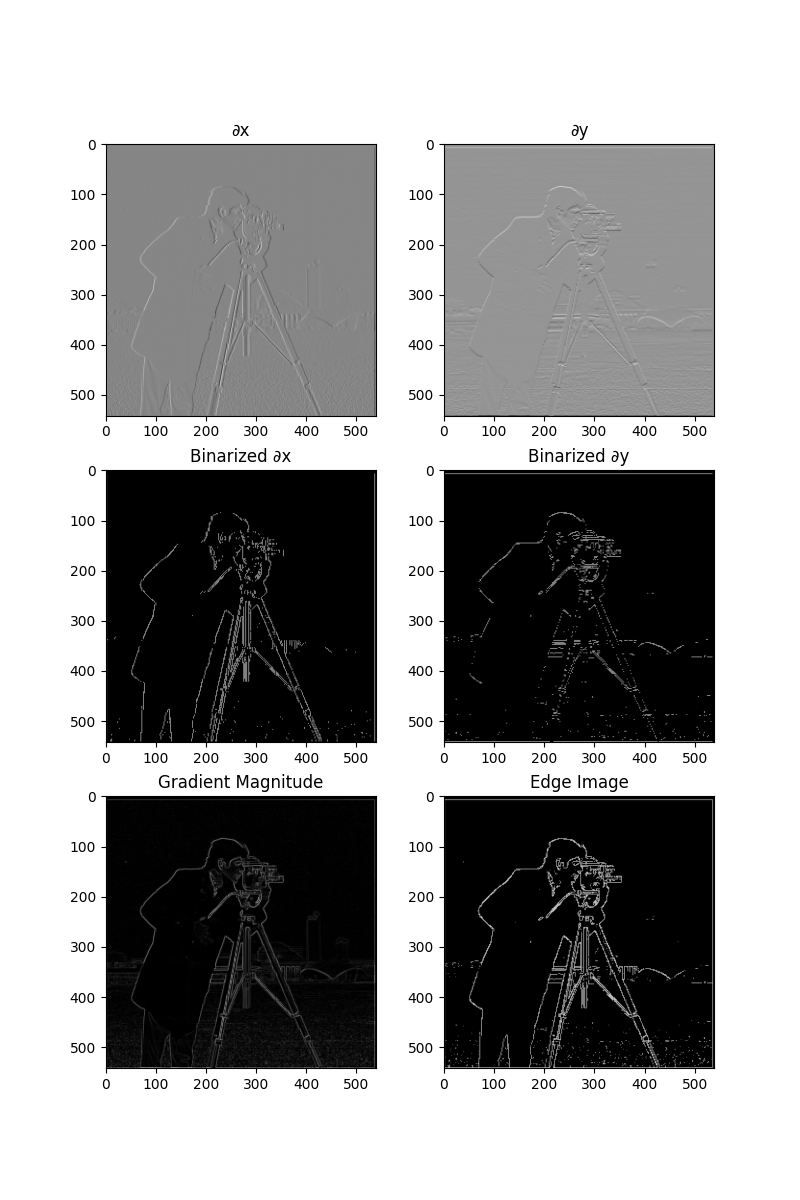

In this section, we compute the partial derivatives of the cameraman image in the x and y directions by convolving it with the finite difference operators Dx and Dy using scipy.signal.convolve2d. The gradient magnitude image is then computed by combining the two partial derivatives (the square root of the sum of their squares). To obtain the edge image, we binarize the gradient magnitude with a threshold chosen qualitatively to suppress noise while still highlighting real edges.
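The steps above can be sketched as follows. This is a minimal illustration, not the exact code used for the results: the operator values `[1, -1]`, the symmetric boundary handling, the function name `gradient_edges`, and the synthetic step-edge image standing in for the cameraman image are all assumptions.

```python
import numpy as np
from scipy.signal import convolve2d

# Finite difference operators (assumed form: a row and a column vector).
D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])

def gradient_edges(image, threshold):
    """Return (gradient magnitude, binary edge map) for a 2D grayscale image."""
    dx = convolve2d(image, D_x, mode="same", boundary="symm")
    dy = convolve2d(image, D_y, mode="same", boundary="symm")
    magnitude = np.sqrt(dx**2 + dy**2)
    return magnitude, magnitude > threshold

# Synthetic stand-in for the cameraman image: a single vertical step edge.
image = np.zeros((8, 8))
image[:, 4:] = 1.0

# The threshold is picked by eye in practice; 0.5 is only a placeholder.
magnitude, edges = gradient_edges(image, threshold=0.5)
```

On a real photograph the threshold has to be tuned by hand: too low and noise survives as spurious edges, too high and faint real edges disappear.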

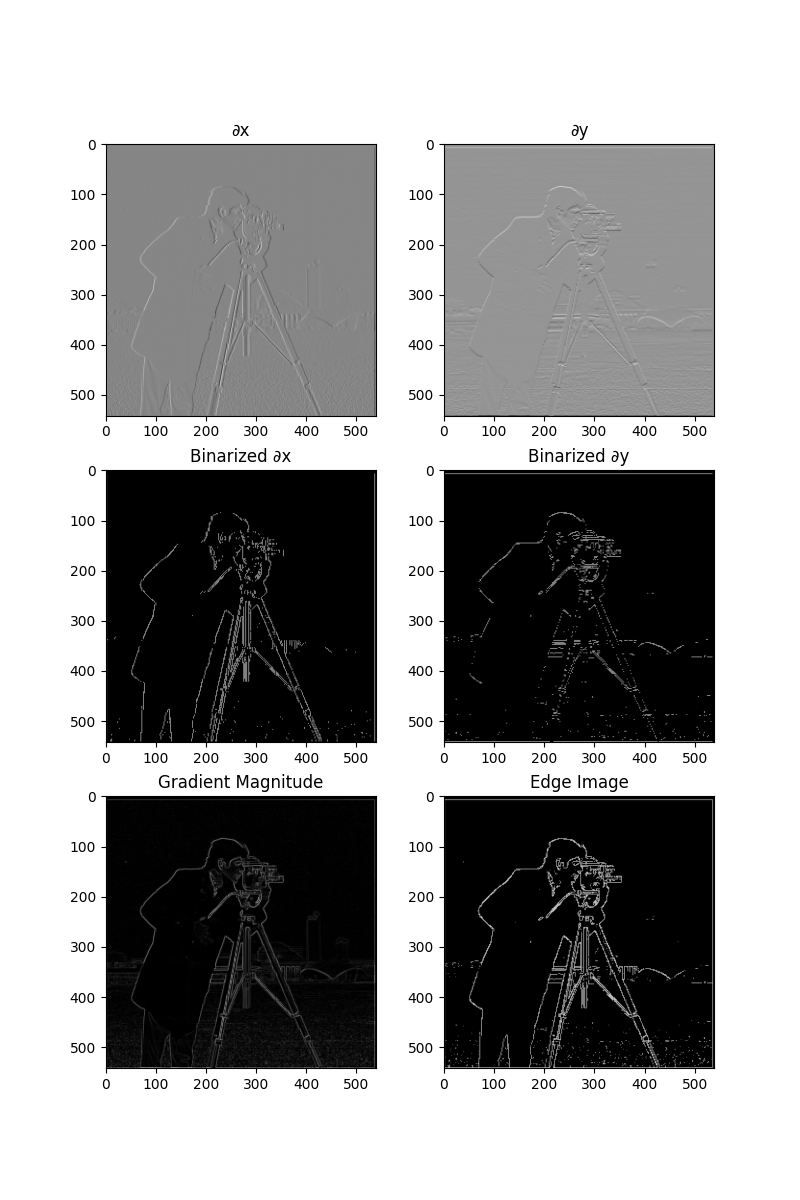

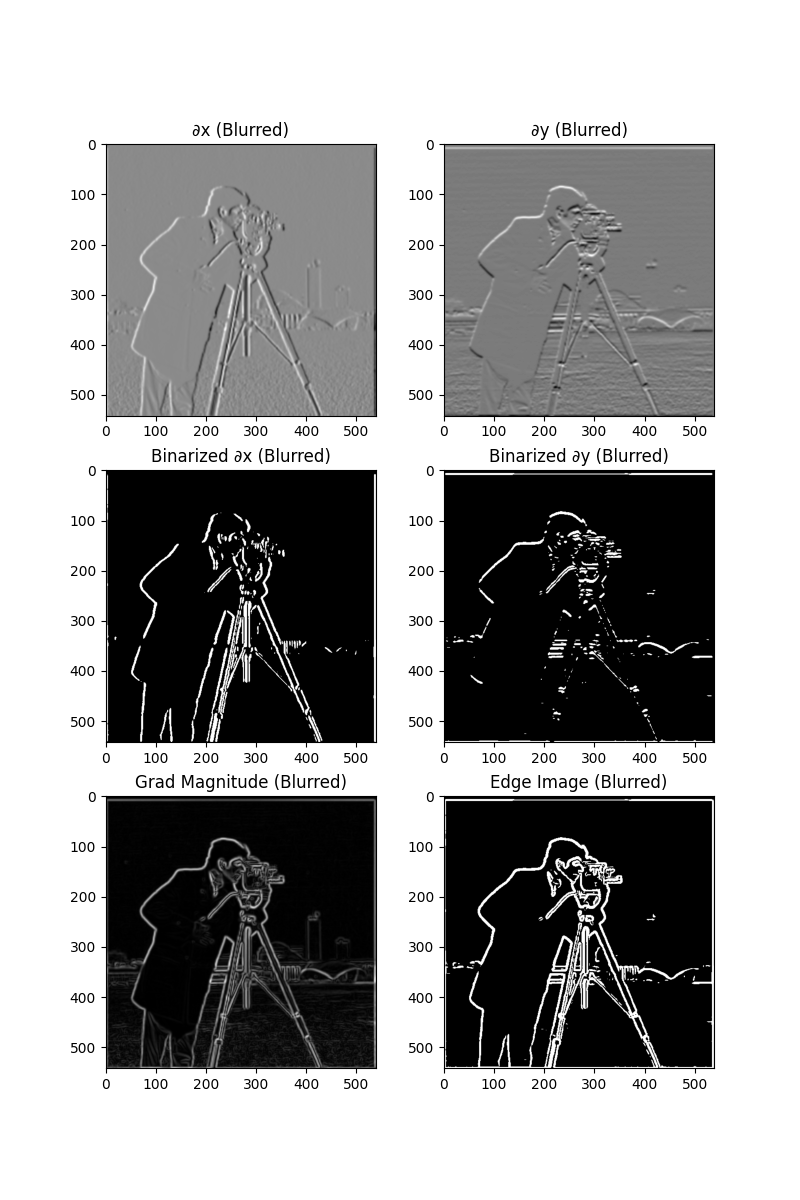

The result from the previous section wasn't ideal. One way to improve it is to smooth the image with a Gaussian filter G before differentiating. The 2D Gaussian kernel is built by creating a 1D Gaussian and taking its outer product with itself, and the image is blurred by convolving it with this kernel. After smoothing, we repeat the procedure of computing the partial derivatives and the gradient magnitude. Then, instead of performing two separate convolutions on the image (blur, then differentiate), we create derivative of Gaussian (DoG) filters by convolving the Gaussian filter with Dx and Dy. Each DoG filter needs only a single convolution with the image for edge detection, and the results have smoother edges and less noise.
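The equivalence between "blur, then differentiate" and a single DoG convolution follows from the associativity of convolution. The sketch below checks it numerically on a random test image. The kernel size, the sigma value, and the helper name `gaussian_kernel_2d` are illustrative assumptions, not the parameters used for the figures.

```python
import numpy as np
from scipy.signal import convolve2d

def gaussian_kernel_2d(size, sigma):
    """Normalized 2D Gaussian built as the outer product of a 1D Gaussian."""
    x = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(x**2) / (2.0 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)

D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])

G = gaussian_kernel_2d(size=9, sigma=1.5)  # assumed parameters
DoG_x = convolve2d(G, D_x)  # default mode="full" keeps the whole filter
DoG_y = convolve2d(G, D_y)

# Random stand-in image, used only to compare the two pipelines.
rng = np.random.default_rng(0)
image = rng.random((32, 32))

# Two convolutions on the image: blur first, then differentiate.
two_step = convolve2d(convolve2d(image, G), D_x)
# One convolution on the image with the precomputed DoG filter.
one_step = convolve2d(image, DoG_x)

same_result = np.allclose(two_step, one_step)
```

The comparison uses full-size outputs with zero padding so that the two pipelines match exactly. With `mode="same"` or other boundary modes, the results can differ slightly near the image border.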

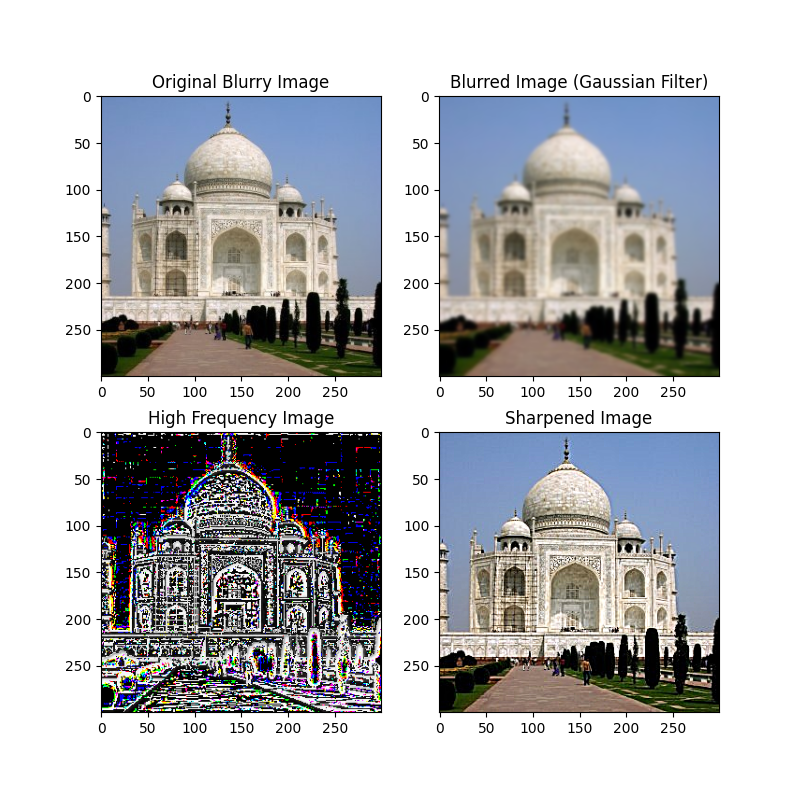

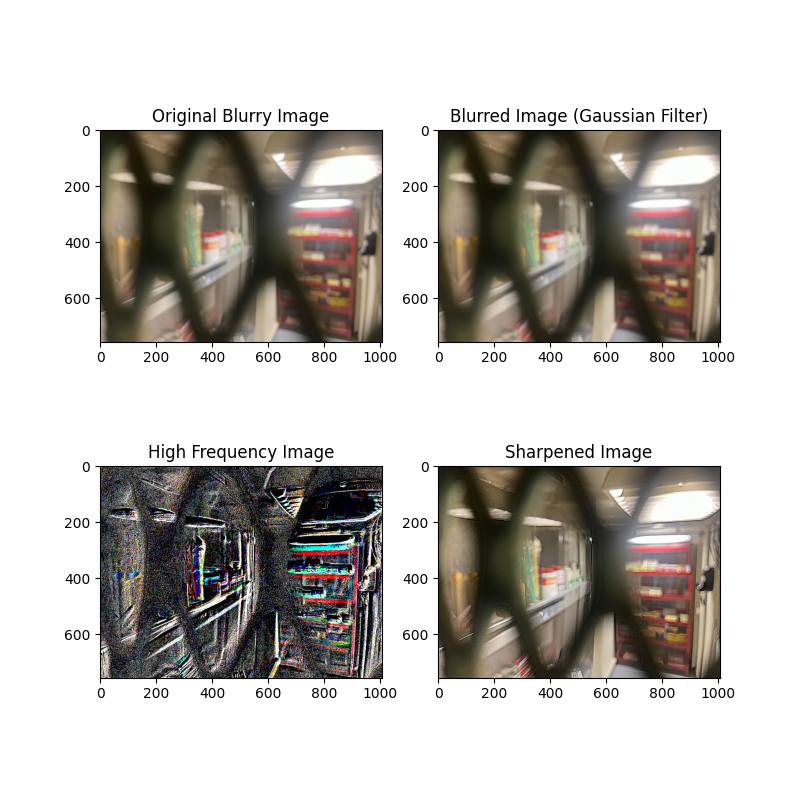

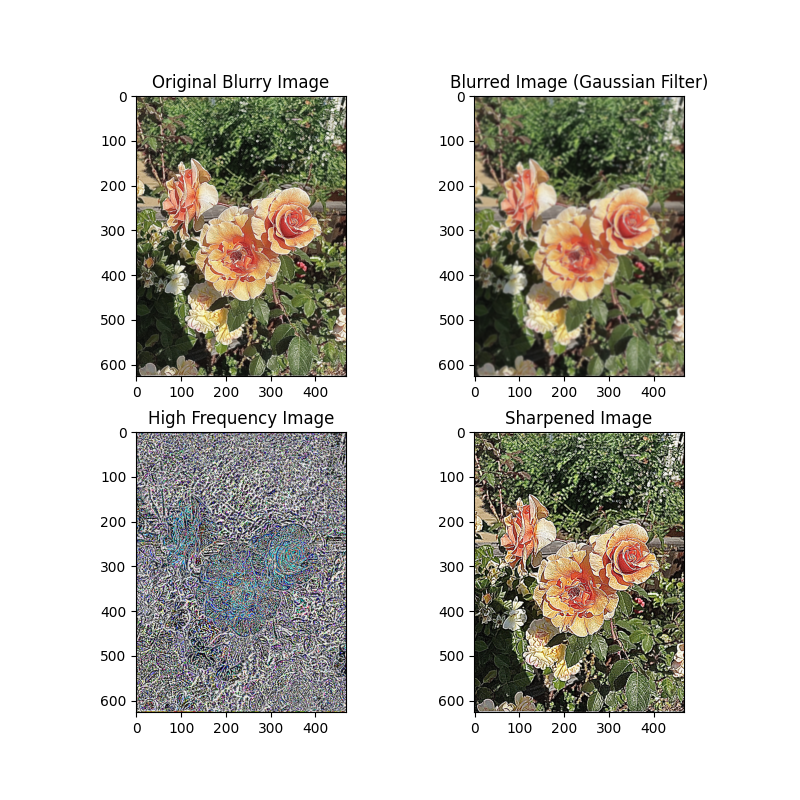

In this section, we use the unsharp masking technique to sharpen a blurry image. First, a Gaussian filter is applied to the image to produce a blurred version, which captures the low-frequency components. The high-frequency details are then obtained by subtracting the blurred image from the original. To enhance sharpness, we add a scaled version of these high frequencies back to the original image. Although the image's resolution is not improved, the sharpening nevertheless makes the image look clearer.
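A minimal sketch of unsharp masking as described above, assuming a grayscale image with values in [0, 1]. The names `unsharp_mask` and `alpha` (the scale on the high frequencies), the kernel-size rule, and the final clipping are illustrative choices rather than the settings used for the results.

```python
import numpy as np
from scipy.signal import convolve2d

def gaussian_kernel_2d(size, sigma):
    """Normalized 2D Gaussian built as the outer product of a 1D Gaussian."""
    x = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(x**2) / (2.0 * sigma**2))
    g /= g.sum()
    return np.outer(g, g)

def unsharp_mask(image, sigma=2.0, alpha=1.0):
    """Sharpen by adding alpha times the high frequencies back to the image."""
    size = 2 * int(3 * sigma) + 1  # assumed rule: about 3 sigma on each side
    G = gaussian_kernel_2d(size, sigma)
    low = convolve2d(image, G, mode="same", boundary="symm")  # low frequencies
    high = image - low                                        # high frequencies
    return np.clip(image + alpha * high, 0.0, 1.0)

# Synthetic stand-in image: a soft step between two gray levels.
image = np.full((16, 16), 0.3)
image[:, 8:] = 0.7
sharpened = unsharp_mask(image, sigma=1.0, alpha=1.5)
```

The sharpened step overshoots on both sides of the edge, which is what makes edges look crisper. No new detail is created, and flat regions are left unchanged.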

That sharpening is not the same as increasing resolution is evident in the second image, bars: the image stays blurry overall, and only objects that were already reasonably clear, such as the cans inside, show a noticeable improvement.

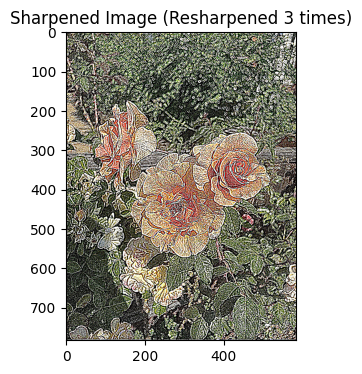

Furthermore, resharpening an image risks losing detail. The flower image below, which had already been sharpened, ends up with bolder outlines. The effect becomes increasingly apparent if we repeat the process a few more times, as the result below shows.

In this part, we create hybrid images, whose appearance changes with viewing distance (or how hard you squint), as described in the SIGGRAPH 2006 paper by Oliva, Torralba, and Schyns. Hybrid images are generated by blending the high-frequency content of one image with the low-frequency content of another. The high frequencies dominate when the image is viewed up close, while only the low frequencies are perceived from a distance.

The process starts by aligning the two input images, which is critical for proper perception. After alignment, we apply a low-pass filter (a 2D Gaussian filter) to one image to extract its smooth, low-frequency components. For the second image, we apply a high-pass filter by subtracting its Gaussian-filtered version from the original, which extracts the high-frequency details. The two filtered images are then combined to produce the hybrid image.
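The filtering and combination steps can be sketched as below, assuming the two grayscale images are already aligned and scaled to [0, 1]. For brevity the sketch uses `scipy.ndimage.gaussian_filter` as a stand-in for convolving with a hand-built Gaussian kernel, and combines the two bands by summation. The function name and the two cutoff values `sigma_low` and `sigma_high` are illustrative assumptions.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def hybrid_image(im_low, im_high, sigma_low=6.0, sigma_high=3.0):
    """Combine the low frequencies of im_low with the high frequencies of im_high."""
    low = gaussian_filter(im_low, sigma_low)               # low-pass
    high = im_high - gaussian_filter(im_high, sigma_high)  # high-pass
    return np.clip(low + high, 0.0, 1.0)

# Random stand-ins for two aligned grayscale images.
rng = np.random.default_rng(0)
im_far = rng.random((64, 64))   # seen from a distance (low frequencies)
im_near = rng.random((64, 64))  # seen up close (high frequencies)

hybrid = hybrid_image(im_far, im_near)
```

The two sigmas are the main knobs: each pair of images tends to need its own cutoffs before both interpretations read clearly.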

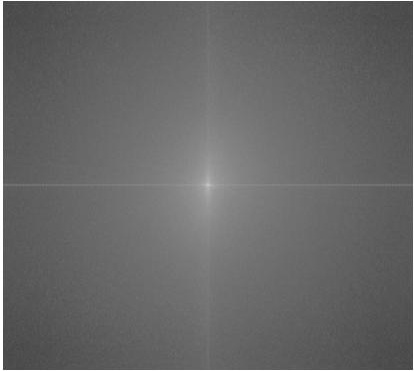

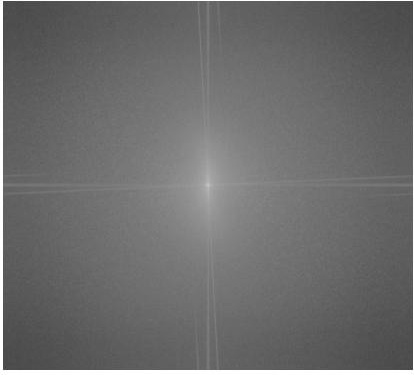

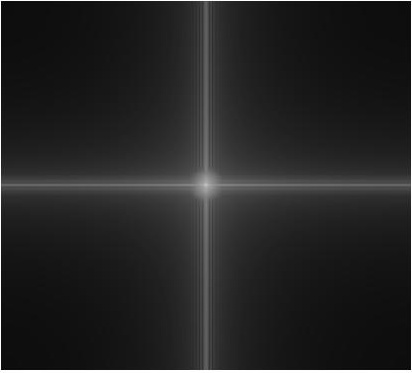

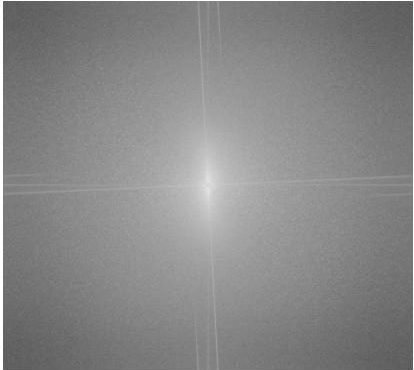

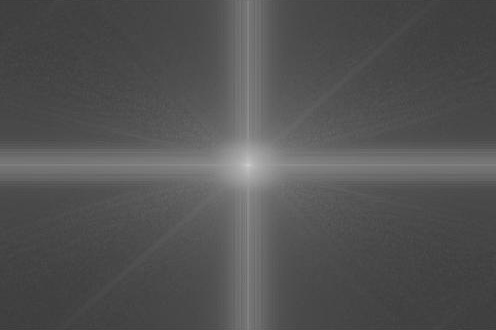

Below is the Fourier transform analysis of the beach images, which visualizes the hybrid process. As the plots show, once each image has been filtered to keep only its respective frequency band (low or high), its spectrum becomes more distinct. This illustrates the frequency distribution of each image and how each contributes to the hybrid effect.
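A common way to produce such plots is the log magnitude of the centered 2D Fourier transform. The sketch below shows that computation under the assumption of a grayscale input. The function name and the small epsilon that guards against `log(0)` are additions for illustration, and the plots above may have been generated differently.

```python
import numpy as np

def log_magnitude_spectrum(gray, eps=1e-8):
    """Log magnitude of the 2D FFT, shifted so zero frequency sits at the center."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))
    return np.log(np.abs(spectrum) + eps)  # eps avoids log(0)

# A constant image contains only the zero-frequency (DC) component,
# so all of its energy lands at the center of the shifted spectrum.
image = np.ones((16, 16))
spectrum = log_magnitude_spectrum(image)
peak = np.unravel_index(np.argmax(spectrum), spectrum.shape)
```

In this centered layout, a low-pass filtered image concentrates its energy near the middle of the plot, while a high-pass filtered image has a suppressed center and relatively more energy toward the outside.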

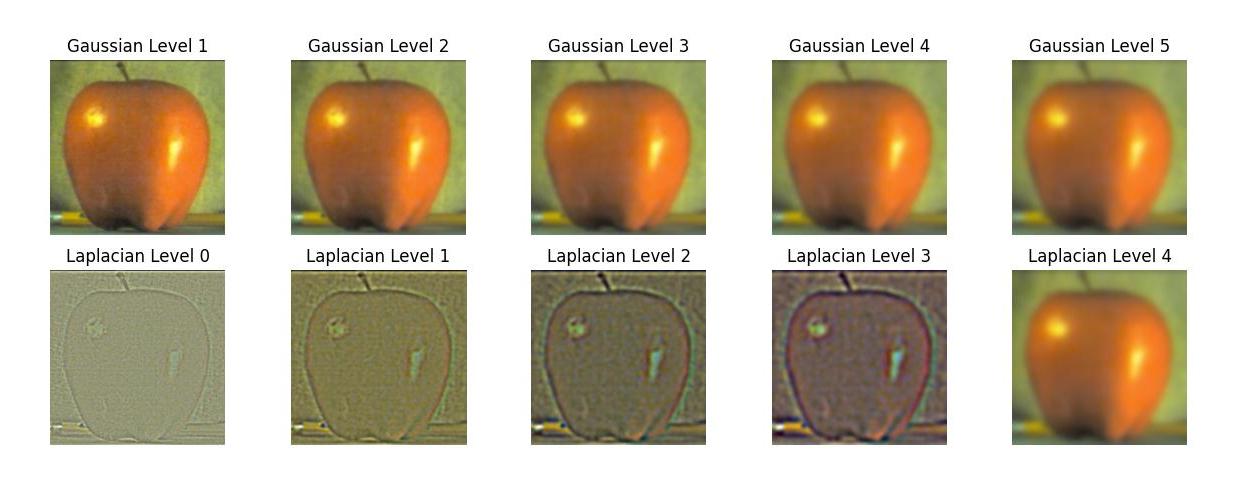

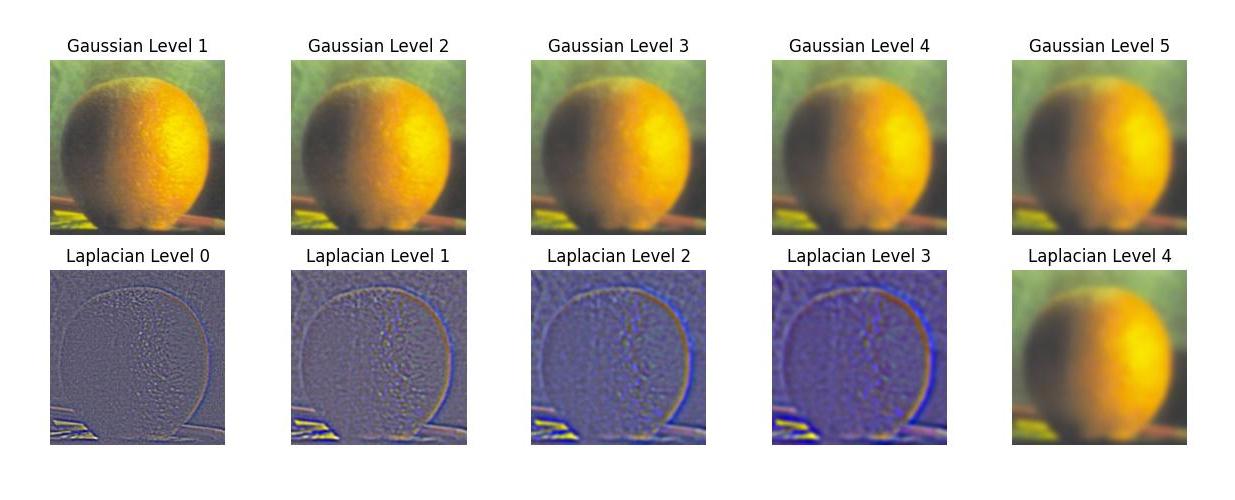

In this section, we implement Gaussian and Laplacian stacks to prepare for multiresolution blending. Unlike pyramids, which downsample the image at each level, the stacks keep the same image dimensions at every level. The Gaussian stack applies the Gaussian filter at each level. The Laplacian stack is constructed by computing the difference between successive levels of the Gaussian stack, capturing the high-frequency details at each level. Each stack is stored in a 3D array (for grayscale images), where each level corresponds to a different degree of smoothing or high-frequency detail extraction. These stacks allow us to blend images in the next section of the project.

The first row displays the Gaussian stack and the second row displays the Laplacian stack, both from level 0 to 4.
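A minimal sketch of the two stacks for a grayscale image. Several details are assumptions rather than the project's confirmed choices: the Gaussian stack is built by blurring the previous level repeatedly with a fixed `sigma`, `scipy.ndimage.gaussian_filter` stands in for a hand-built kernel, and the last Laplacian level keeps the final Gaussian level so that both stacks have the same number of levels and the Laplacian levels sum back to the original image.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def gaussian_stack(image, levels=5, sigma=2.0):
    """Stack of progressively blurred copies, all the same size as the input."""
    stack = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        stack.append(gaussian_filter(stack[-1], sigma))
    return np.stack(stack)  # shape: (levels, height, width)

def laplacian_stack(g_stack):
    """Differences of successive Gaussian levels, plus the final blurred level."""
    band_pass = g_stack[:-1] - g_stack[1:]
    return np.concatenate([band_pass, g_stack[-1:]], axis=0)

# Random stand-in for a grayscale image.
rng = np.random.default_rng(0)
image = rng.random((32, 32))

g_stack = gaussian_stack(image, levels=5)
l_stack = laplacian_stack(g_stack)
reconstructed = l_stack.sum(axis=0)  # telescoping sum recovers the input
```

Keeping the residual low-pass level is what makes the reconstruction exact, which the blending step relies on when it adds the levels back together.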

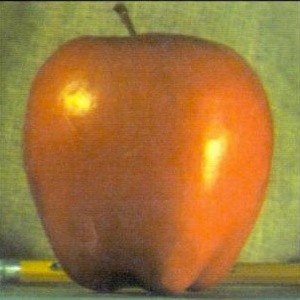

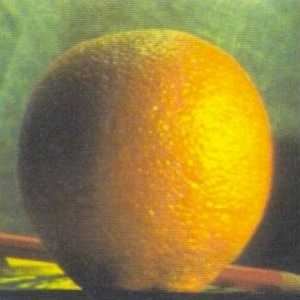

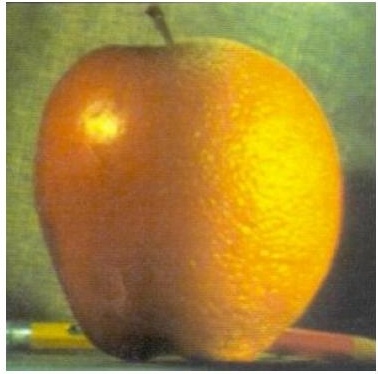

Here, we use the results from the previous section to implement multiresolution blending. This approach follows the ideas presented in Burt and Adelson’s 1983 paper. To blend two images, we first create Gaussian and Laplacian stacks for both input images (e.g., the apple and the orange) and a Gaussian stack for the blending mask. We then combine the Laplacian levels of the two images, weighting them by the Gaussian-blurred mask at the corresponding level. This produces a seamless blend in which the transition between the two images is smooth and natural (though a natural-looking result also requires that the two images form a plausible combination). After creating the blended Laplacian stack, we reconstruct the final blended image by adding the levels back together, from the highest level to the lowest.

Blending with irregular masks is more difficult but similar in essence. For the result below, I used an external tool to cut out the region I wanted to blend and converted it to a pure binary image, which serves as the mask.
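The blending procedure can be sketched as follows for grayscale images in [0, 1]. The stack helpers repeat the assumptions made for the stack sketch (repeated blurring with a fixed `sigma`, `scipy.ndimage.gaussian_filter` as the blur, residual low-pass level kept in the Laplacian stack). The function name `blend`, the parameter values, and the half-and-half mask are illustrative only.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def gaussian_stack(image, levels=5, sigma=2.0):
    """Stack of progressively blurred copies, all the same size as the input."""
    stack = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        stack.append(gaussian_filter(stack[-1], sigma))
    return np.stack(stack)

def laplacian_stack(g_stack):
    """Differences of successive Gaussian levels, plus the final blurred level."""
    return np.concatenate([g_stack[:-1] - g_stack[1:], g_stack[-1:]], axis=0)

def blend(im_a, im_b, mask, levels=5, sigma=2.0):
    """Blend im_a (where mask is 1) with im_b (where mask is 0), level by level."""
    l_a = laplacian_stack(gaussian_stack(im_a, levels, sigma))
    l_b = laplacian_stack(gaussian_stack(im_b, levels, sigma))
    g_m = gaussian_stack(mask, levels, sigma)  # softer mask at coarser levels
    blended_levels = g_m * l_a + (1.0 - g_m) * l_b
    return np.clip(blended_levels.sum(axis=0), 0.0, 1.0)

# Random stand-ins for the apple and the orange, with a vertical seam mask.
rng = np.random.default_rng(0)
apple = rng.random((32, 32))
orange = rng.random((32, 32))
mask = np.zeros((32, 32))
mask[:, :16] = 1.0  # left half from the first image

blended = blend(apple, orange, mask)
```

Because coarse levels are mixed with a heavily blurred mask and fine levels with a sharper one, low frequencies transition gradually while fine detail changes over a narrow band around the seam.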

Overall, a key takeaway is the role of Gaussian blur and frequency in image processing (especially blending). Gaussian filtering suppresses noise so that the key structures of an image stand out, which helps us extract the information needed for edge detection. The same filters let us smooth images, extract high-frequency edges, and create seamless transitions between images in tasks like hybrid image creation and multiresolution blending. Through this project I better understand the essence of images in their digital representation: they are just numbers, as Prof. Efros mentioned in the introductory lecture. A significant amount of the work went into adjusting parameters (e.g., channels, resolution, dimensions, frequency, kernel size, and the standard deviation of the Gaussian), since a change to any of them may cause the whole pipeline to produce a completely different result.